DoD is now advancing into the third generation of information technologies. This progress is marked by a shift away from an emphasis on server-based computing toward a concentration on the management of huge amounts of data.

This calls for technical innovation and for abandoning primary dependence on a multiplicity of contractors, because interoperable data must now be accessible from most DoD applications. In the second generation, DoD depended on thousands of custom-designed applications, each with its own database. The time has now come to view DoD as an integrated enterprise that requires a unified approach. DoD must be ready to deal with attackers who have chosen to corrupt DoD's widely distributed applications as a platform for waging war.

When Google set out to index the world’s information, a task no one had yet accomplished, the company had to invent ways to manage its global data platform uniformly across millions of servers in more than 30 data centers. DoD has now embarked on creating a Joint Information Environment (JIE) that will unify access to logistics, finance, personnel resources, supplies, intelligence, geography and military data. Once huge amounts of sensor data are included, the JIE will face challenges two to three orders of magnitude greater in organizing the third generation of computing.

JIE applications will have to reach across thousands of separate databases serving the diverse needs of interoperable Joint services. Third-generation systems will have to support millions of desktops, laptops and mobile networks, responding to potentially billions of inquiries whose results must be assembled rapidly and securely.

The combined JIE databases will certainly exceed thousands of petabytes. Even under emergency conditions, the JIE will have to process all of its daily transactions with 99.9999% reliability. Even a very small security breach would be dangerous, because a single critical event may slip by unnoticed: a failure rate of 0.0001% applied to a billion transactions still leaves 1,000 potential flaws.
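The arithmetic behind the last point can be checked directly. The sketch below assumes, as the text does, a volume of one billion transactions per day; the function name is illustrative only. It uses `Decimal` so that the percentages are computed exactly rather than with binary floating point.

```python
from decimal import Decimal

def expected_failures(transactions: int, reliability_pct: str) -> Decimal:
    """Return the expected number of transactions that miss the reliability target.

    reliability_pct is a string such as "99.9999" so Decimal parses it exactly.
    """
    failure_fraction = 1 - Decimal(reliability_pct) / 100
    return transactions * failure_fraction

# One billion transactions per day, the illustrative volume used in the text.
DAILY = 1_000_000_000

for level in ("99.9", "99.99", "99.999", "99.9999"):
    print(f"{level}% reliability -> {expected_failures(DAILY, level):,.0f} potential flaws")
```

Each additional "nine" divides the count by ten, yet even at six nines a billion-transaction day still leaves about a thousand events that could slip by unnoticed.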

The company that compares most closely with DoD is General Electric. It has a staff of over 300,000 and maintains and operates complex capital equipment such as aircraft, electric generators, trains and medical equipment. GE also manages a long supply chain, whereas none of the consumer-oriented applications that DoD has studied require one. DoD should not be compared with consumer cloud firms such as Google, Yahoo and Facebook, because these firms deliver only a limited set of applications. Amazon, for instance, offers only a proprietary IaaS service that supplies computing capacity but not applications. DoD should therefore be compared with organizations whose IT must serve a large, highly diversified and global constituency.

DoD operates the world’s largest collection of industry-sourced equipment such as tanks, helicopters, submarines and ships. It so happens that General Electric is already migrating its information technologies into the third generation of computing, so we can learn a great deal from its progress.

General Electric has had to learn how to do three things. First, it had to acquire the capacity to operate on much larger data sets, spanning multiple petabytes, which required going beyond the limited capacity of its existing relational databases. Second, it had to adopt a culture of rapid application development. With most of the data management, communications and security code already provided by the platform-as-a-service (PaaS) infrastructure, a new programmer should be able to produce usable results on the first day of work. Third, GE is now refocusing on the “Internet of Things” (IoT). This covers billions of objects and data sources such as spare parts, sensor inputs, medical diagnoses, equipment identification, ammunition, telemetry and the geographic location of all devices.

For instance, a single drone flight generates over 30 TB of data about engine conditions, maintenance statistics, repair data and intelligence. This information must then be linked to planning and logistics systems as well as to command and control systems. In the future, the JIE will generate several orders of magnitude more data than is captured today. Ultimately, systems that include the IoT will deliver hundreds of billions of transactions, producing a flood of information that will have to be screened and analyzed. DoD systems will therefore have to be redesigned to examine not only data at rest but also incoming transactions dynamically, in real time.
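To make the difference between examining data at rest and screening a live stream concrete, the sketch below inspects each incoming sensor reading as it arrives, comparing it against a bounded window of recent history instead of a stored data set. The rolling mean plus or minus k standard deviations rule is a swapped-in technique chosen for illustration; the text does not name a screening method, and the function name, window size, threshold and engine-temperature readings are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple

def screen_stream(readings: Iterable[float], window: int = 20,
                  k: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings that deviate sharply from recent history.

    Hypothetical rule: once the window is full, a reading is flagged when it lies
    more than k population standard deviations from the window's mean. Only the
    last `window` readings are kept, so memory stays constant however long the
    stream runs.
    """
    recent: deque = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            if abs(value - mu) > k * sigma:
                yield i, value
        recent.append(value)

# Hypothetical engine-temperature feed with one abnormal spike at index 30.
temps = [600.0 + (i % 5) for i in range(50)]
temps[30] = 900.0

flagged = list(screen_stream(temps, window=20, k=3.0))
print(flagged)
```

Because `screen_stream` is a generator, it can sit directly on an incoming feed and raise alerts while the data is still in motion; a production system would use far richer rules, but the shape of the problem (bounded state, per-event decisions) is the same.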

Meanwhile, DoD will also have to cut spending on second-generation and even first-generation applications in order to find the funds needed to support third-generation innovations. This can be achieved by dramatically consolidating applications to take advantage of the large operating cost reductions available through virtualization. The economics of cost reduction will have to be balanced against reliability and security. Such innovations will be expensive unless they are developed under a tightly enforced common systems architecture.

Second-generation applications will not have to be rewritten; they can be included, alongside third-generation applications, in a PaaS environment that makes it possible to exchange data in response to unpredictable incoming queries. Reducing costs while making applications interoperable will require a massive consolidation of hundreds of existing data centers. The recent introduction of the software-defined data environment (SDN) will make that possible. SDN allows the costs of computing, communications and security to be shared. It can cut costs while increasing redundancy and delivering superior reliability.

In planning the transition to third-generation computing, DoD will have to build the new platform on open-source solutions, because it must be able to move applications from private clouds to public clouds and back as the need arises. Ultimately, DoD will end up housing most of its critical applications in private clouds, while retaining options for using public clouds for lower-security applications such as finance, human resources and health administration.

The more data that is accessible to any of the billions of inquiries, the greater the utility of a shared data platform. There is no question that DoD, like GE, will have to start converting existing databases from stand-alone relational solutions to recently available “Big Data” software. In such an environment, thousands of secure processing “sandboxes” will store data at multiple locations so that operations can be restored rapidly after a failure. Applications will then be accessible from any source that holds the appropriate access privileges.

The adoption of third-generation computing must overcome the difficulties that arise from the projected increase in the volume and complexity of DoD systems. This cannot be achieved by relying entirely on increasing the size and quality of existing staffs, because that is not affordable. Although new cloud software will increase the productivity of network control staffs, the workload caused by increasing malware attacks will make it necessary to invest in far greater automation of all controls.

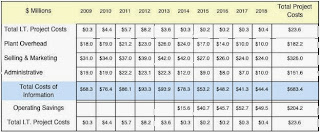

The 2014 DoD IT budget is $34.1 billion. Of this, $24.4 billion (72%) goes to ongoing operations, leaving $9.7 billion (28%) to meet new functional requirements and little for innovation. How should funds be allocated in preparation for third-generation computing?

There is no question that the consolidation of over 3,000 applications should be the first priority in freeing funds from ongoing operations, so that money becomes available for new development and innovation. A variety of models are available to simulate the potential benefits of virtualized computing. The technology is mature, can be applied by seasoned staffs and can be implemented rapidly. Cost reductions of 20 to 40% have been verified, with break-even points reached in less than two years. The major obstacle is therefore not technological but organizational.

As ongoing operations reduce costs through efficiencies, spending on new development, and particularly on third-generation innovations, should rise above its current 28% share. Although cost reductions are essential, they are tactical; the DoD strategic budget should be evaluated primarily on the share of money that can be deployed for third-generation innovations.
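A rough sense of how far that share could move comes from combining the budget figures above with the verified 20 to 40% reduction range. The sketch below is an upper bound under two loudly stated assumptions that the text does not make: the reduction applies to the entire $24.4 billion operations line, and every dollar saved is redirected to new development within an unchanged $34.1 billion total. The function name is illustrative only.

```python
from decimal import Decimal, ROUND_HALF_UP

TOTAL = Decimal("34.1")       # 2014 DoD IT budget, $ billions (from the text)
OPERATIONS = Decimal("24.4")  # ongoing operations, $ billions (from the text)

def development_share(ops_reduction_pct: int) -> Decimal:
    """Percent of a fixed total budget available for new development after a cut.

    Assumption (not from the text): the cut applies to all of OPERATIONS and
    the savings are moved entirely into new development.
    """
    savings = OPERATIONS * ops_reduction_pct / 100
    development = TOTAL - OPERATIONS + savings
    share = development / TOTAL * 100
    return share.quantize(Decimal("1"), rounding=ROUND_HALF_UP)

for cut in (0, 20, 40):
    print(f"{cut}% cut in operations -> {development_share(cut)}% for new development")
```

With no cut, the sketch reproduces the 28% share; under the stated assumptions, a 20% cut would raise it to about 43% and a 40% cut to about 57%. Actual gains would be smaller, since consolidation savings apply only to the portion of operations that is virtualized.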

In 1992, Defense Management Report Decision (DMRD) 918 set the direction for operating cost reductions through consolidation. The expected savings were never achieved, because strong direction from the Office of the Secretary of Defense was lacking. New expenses were permitted that far exceeded any cost cuts. Because the third generation of computing has already arrived, the lesson from the past is to allow the DoD CIO to manage the deployment of the entire IT budget. Sufficient funds must become available to produce the essential innovations.