http://www.securityweek.com/cyber-war-and-threat-boomerang-effect

Cyber weapons may be cheaper to make than tanks and nuclear arms, but they come with a dangerous caveat: once they are discovered, the attacker can become the target.

At Kaspersky Lab's Cyber Security Summit in New York City today, the pros and cons of developing cyber-weapons such as Stuxnet and Duqu, and how their use can impact corporate environments, were front and center.

While it may not be possible to disassemble and reassemble a cruise missile after it is used, that is entirely possible when it comes to cyber-weapons, Kaspersky Lab CEO Eugene Kaspersky observed in a panel discussion.

"That," Kaspersky said, "is why my point is that a cyber-weapon is extremely, extremely dangerous…the victims will learn, and maybe they will send this boomerang back to you."

From his seat on the panel, Howard Schmidt, who served as the cyber-security coordinator for the Obama administration for three years, compared the situation to a passage from Sun Tzu's famous book, 'The Art of War.'

"You would never want to use fire in a battle if the wind's blowing in your face," Schmidt said. "That just makes sense. The second thing you want to do, if indeed you want to use fire and the wind is blowing in your face, you'd better hope you have nothing that will catch fire. The third thing is if you have something that catches fire, it better not be important to you."

"When we look at the pieces of malware out there that are being pushed around, a government may say 'this is a very, very well-crafted, very specific piece of malware designed to do something very specific.' To believe that's going to stay there and never ever be discovered, never ever be reverse engineered…that's just foolhardy," he said. "So what happens is you are playing with fire."

The bottom line, he concluded, is "why would you just sort of throw that out there and hope that it doesn't come back and hit you? Those are the things we really, really have to, on a nation state level, start to think about it."

Their commentary comes not long after the publication of 'Red October', a cyber-espionage attack that successfully compromised computer systems at diplomatic, government and scientific research organizations during a five-year period. No proof has been provided that it was government-sponsored. However, there have been widespread reports during the past two years that other malware, such as Stuxnet, was linked to efforts by the U.S. and Israel to sabotage Iran's nuclear ambitions.

Fighting the cyber war is in some ways akin to dealing with money laundering, Schmidt said, recalling that in the past many governments either participated in money laundering or looked the other way. Others, however, decided to crack down on it. Likewise, some countries are reluctant to crack down on hackers whose activities benefit their economies, he said.

Operation Aurora, the cyber attack publicized by Google in 2010, prompted general acceptance of the fact that countries were perpetrating cyber attacks, Costin Raiu, director of the global research and analytics team at Kaspersky Lab, said during a presentation on the threat landscape for corporations. It was also proof that not all attacks were governments targeting governments; some were governments targeting companies.

He also noted that cyber-war can cause collateral damage. One example is Chevron, which disclosed in 2012 that some of its systems had been infected with Stuxnet in 2010.

While all corporations face a level of risk associated with cyber-attacks, some industries are more aware of the danger than others – principally because they have been hit harder by high-profile attacks, Kaspersky said.

"Those that have been a victim, you can guarantee at the next board meeting this was an agenda item," Schmidt said. "If they're good, not only was it an agenda item in the direct aftermath but…(now) every time there's a board meeting it will be on the agenda."


Software Defined Networking - A New Network Weakness?

http://www.securityweek.com/software-defined-networking-new-network-weakness

Network virtualization, under the umbrella of Software Defined Networking (SDN), presents an opportunity for network innovation but at the same time introduces a new weakness which will more than likely be targeted once solutions become more commercially available.

Whether the underlying technology is OpenFlow or an overlay virtual network, network virtualization solutions are based on a network controller, which can be attacked in different ways. A successful attack on the controller will neutralize the entire network operation for which the controller is responsible. In effect, this is a new type of attack that puts the entire network operation under denial-of-service conditions.

A little background on software defined networking (SDN), which includes the overall trend of network virtualization and OpenFlow:

OpenFlow/SDN challenges the basis of old-style networking and suggests a completely new approach: centralized algorithms and intelligence rather than distributed ones (distributed algorithms are typically executed concurrently, with separate parts of the algorithm having limited information about what the other parts are doing). This centralized approach leads to a democratization of networks, meaning that anyone who wants to control the network can do so through programming, using an abstraction layer as the network operating system (Network OS).

The central network control entity is an essential part of the new SDN solutions and brings many advantages over the way networks are handled today (you are welcome to read more about it in my recent blogs and columns, “network apps” and “Secure SDN”).

Having said this, the network controller presents a new weakness that can be the target of an attack. To illustrate the security issue, here is a simple example for an OpenFlow-enabled network:

The basic OpenFlow principle requires that each packet representing a new “flow” (e.g., a typical TCP flow, an L3 IP-level flow, etc.) that enters the OpenFlow network be sent to the network controller. The controller calculates the best path this flow should be routed through and distributes this knowledge, in the form of flow table entries, to all OpenFlow-enabled routers and switches in the network. Once this is done, further packets associated with the same flow are routed through the network without any further involvement by the controller.
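To make this reactive flow setup concrete, here is a toy Python model, not real OpenFlow code. The `Switch`, `Controller` and `forward` names are hypothetical, a flow is assumed to be identified by the TCP/UDP 5-tuple, and the "best path" computation is only a placeholder. The point it shows is that only the first packet of a flow reaches the controller; later packets hit the flow table.

```python
from dataclasses import dataclass, field

# Assumption: a "flow" is identified by the 5-tuple (src IP, dst IP, src port, dst port, protocol).
FlowKey = tuple[str, str, int, int, str]


@dataclass
class Switch:
    """Hypothetical OpenFlow-style switch: its flow table maps a flow key to an output port."""
    name: str
    flow_table: dict[FlowKey, int] = field(default_factory=dict)


@dataclass
class Controller:
    """Hypothetical central controller that decides a path for every new flow."""
    packet_ins: int = 0

    def compute_port(self, key: FlowKey) -> int:
        # Placeholder for a real path computation: well-known ports out port 1, others port 2.
        return 1 if key[3] < 1024 else 2

    def handle_packet_in(self, key: FlowKey, switches: list[Switch]) -> int:
        self.packet_ins += 1
        port = self.compute_port(key)
        for sw in switches:  # push the decision to every switch as a flow table entry
            sw.flow_table[key] = port
        return port


def forward(key: FlowKey, ingress: Switch, switches: list[Switch], ctrl: Controller) -> int:
    """Forward one packet: use the local flow table, or ask the controller on a table miss."""
    if key in ingress.flow_table:
        return ingress.flow_table[key]  # known flow: no controller involvement
    return ctrl.handle_packet_in(key, switches)  # new flow: sent to the controller


switches = [Switch("s1"), Switch("s2")]
ctrl = Controller()
flow = ("10.0.0.1", "10.0.0.2", 40000, 443, "tcp")
for _ in range(3):
    forward(flow, switches[0], switches, ctrl)
print(ctrl.packet_ins)  # 1: only the first packet of the flow reached the controller
```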

SDN, and more specifically OpenFlow technology, allows the network “flow” to be defined through software in the network controller. A flow can be a typical TCP connection, a pair of source and destination IP addresses, a range of IPs, a protocol type, etc.
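The choice of flow definition directly determines how often the controller is consulted. The short sketch below uses made-up packet headers and three illustrative flow definitions (the names are hypothetical). It counts how many distinct "new flows", and therefore controller requests, the same traffic produces under each definition.

```python
# Hypothetical packet headers: (src IP, dst IP, src port, dst port, protocol).
packets = [
    ("10.0.0.1", "10.0.0.9", 40000, 80, "tcp"),
    ("10.0.0.1", "10.0.0.9", 40001, 80, "tcp"),
    ("10.0.0.1", "10.0.0.9", 40002, 443, "tcp"),
    ("10.0.0.2", "10.0.0.9", 40000, 80, "tcp"),
    ("10.0.0.2", "10.0.0.9", 5353, 53, "udp"),
]

# Each illustrative "flow definition" is a function that extracts the match key from a packet.
FLOW_DEFINITIONS = {
    "TCP/UDP connection (5-tuple)": lambda p: p,
    "source/destination IP pair": lambda p: (p[0], p[1]),
    "destination IP only": lambda p: p[1],
}


def count_flows(packets, key_fn) -> int:
    """Number of distinct flows, i.e. how many times the controller would be consulted."""
    return len({key_fn(p) for p in packets})


for name, key_fn in FLOW_DEFINITIONS.items():
    print(f"{name}: {count_flows(packets, key_fn)} new flows")
```

With these five packets the 5-tuple definition yields five flows, the IP pair two, and the destination address alone one: the finer the definition, the more traffic counts as new.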

Understanding this process reveals two main security weaknesses that are associated with new types of denial of service attacks:

• The data path infrastructure, i.e., the OpenFlow-enabled switches and routers, can now be the target of “flow table” saturation attacks.

• The controller entity can be flooded with packets that represent new flows at a rate it cannot process, putting it into a DoS condition in which it “refuses” to let new flows enter the network or reach their destinations (each flow can, of course, represent a new online business transaction or any other type of communication).
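Both weaknesses can be seen in a toy simulation under assumed numbers. The model assumes a controller that can set up a fixed number of new flows per second behind a bounded request queue, and a switch flow table with a fixed number of entries. The capacities and names are illustrative assumptions, and the toy table simply refuses new entries when full; real switches may instead evict or time out entries.

```python
class FlowTable:
    """Toy switch flow table with a fixed number of entries (hypothetical, for illustration)."""

    def __init__(self, max_entries: int):
        self.max_entries = max_entries
        self.entries: dict[tuple, int] = {}

    def install(self, key: tuple, port: int) -> bool:
        """Install a flow entry; return False if the table is already full."""
        if key not in self.entries and len(self.entries) >= self.max_entries:
            return False
        self.entries[key] = port
        return True


def simulate_controller(arrivals_per_sec: list[int], capacity_per_sec: int,
                        queue_limit: int) -> tuple[int, int]:
    """Count new-flow requests processed vs. dropped by a controller with a bounded queue."""
    queue = processed = dropped = 0
    for arrivals in arrivals_per_sec:
        accepted = min(arrivals, queue_limit - queue)  # requests beyond the queue are lost
        dropped += arrivals - accepted
        queue += accepted
        served = min(queue, capacity_per_sec)  # at most `capacity_per_sec` flows set up per second
        queue -= served
        processed += served
    return processed, dropped


# Normal load stays below capacity; a flood of new flows overwhelms the controller.
print(simulate_controller([800] * 5, capacity_per_sec=1000, queue_limit=2000))   # (4000, 0)
print(simulate_controller([5000] * 5, capacity_per_sec=1000, queue_limit=2000))  # (5000, 19000)

table = FlowTable(max_entries=3)
for i in range(3):
    table.install(("bogus", i), port=1)  # bogus flows fill the table
print(table.install(("legit-user", 0), port=2))  # False: the legitimate flow is refused
```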

As this is pretty basic and straightforward, I don’t want to give too many ideas to attackers. But I will give one scenario of an attack on such an OpenFlow-supported SDN infrastructure:

1. An attacker activates a new network scanner that generates legitimate traffic in the OpenFlow-supported network.

2. The network scan tool is designed to reveal the “routing logic” that the controller was programmed to enforce and the definition of a “flow” in the network, i.e., whether it is a TCP connection, just a pair of IP addresses, etc.

3. Once the “flow” definition is known, the attacker can produce a high rate of traffic that generates new “flows” until it exceeds the network controller’s capacity and puts the entire network in a DoS condition.

How Efficient is the Management of DoD Enterprise Systems?

In March 2012 the GAO delivered to the Congressional Committee on Armed Services a report on Enterprise Resource Planning (ERP) Systems. It covered ten systems with total estimated current costs of $22.7 billion: General Fund Enterprise Business System (GFEBS); Global Combat Support System-Army (GCSS-Army); Logistics Modernization Program (LMP); Integrated Pay and Personnel System (IPPS); Enterprise Resource Planning System (ERP); Global Combat Support System-Marine Corps (GCSS-MC); Defense Enterprise Accounting and Management System (DEAMS); Expeditionary Combat Support System (ECSS); Integrated Personnel and Pay System (AF-IPPS); and Defense Agencies Initiative (DAI).

The ERPs would replace legacy systems costing $0.89 billion per year. Replacing those systems would take anywhere from seven to fourteen years. Once finally installed, the ERPs would cost up to $207,561 per user and have payback periods as long as 168 years.

The primary reason for building the new ERPs is to modernize the interfaces with 560 legacy systems already in place and connecting with 1,217 existing applications to meet changing requirements.

The projected payback years exceed the life expectancy of technologies in every case. That makes these investments questionable.
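The payback argument is simple arithmetic: undiscounted payback is the investment divided by the annual savings, and an investment is questionable when that number exceeds the technology's useful life. The sketch below uses hypothetical figures chosen only to reproduce a 168-year payback; they are not taken from the GAO report, and the 10-year life expectancy is likewise an assumption.

```python
def simple_payback_years(investment: float, annual_savings: float) -> float:
    """Undiscounted payback: years of savings needed to recover the investment."""
    if annual_savings <= 0:
        return float("inf")  # the investment is never recovered
    return investment / annual_savings


def pays_back_within_life(investment: float, annual_savings: float,
                          technology_life_years: float) -> bool:
    """True if the investment is recovered before the technology is expected to be obsolete."""
    return simple_payback_years(investment, annual_savings) <= technology_life_years


# Hypothetical figures for illustration only, not from the GAO report.
investment = 1_680_000_000   # dollars
annual_savings = 10_000_000  # dollars per year
life = 10                    # assumed technology life expectancy, years

print(simple_payback_years(investment, annual_savings))         # 168.0
print(pays_back_within_life(investment, annual_savings, life))  # False
```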

The table above is incomplete. For instance, the Navy’s NEXTGEN is excluded, even though it is projected to take 11 years and cost over $40 billion. There are also enterprise applications, such as the Defense Integrated Military Human Resources System (DIMHRS), which was aborted after spending over a billion dollars.

Much can be learned from the GAO report about how DoD invests in multibillion-dollar projects:

1. The project implementation time always exceeds commercial practices. DoD pursues a sequential phase approach for planning, design, coding and implementation, which means that during each phase time-consuming agreements must be negotiated about interfaces.

2. Elongated project duration contributes to rising obsolescence. Program management is continually negotiating 1,217 interfaces with stakeholders while features are changing. This increases costs and delays schedules.

3. The cost of every ERP continues to rise as changing requirements dictate revisions that propagate well beyond the scope of any single ERP.

4. The non-standard user interfaces of each ERP require large investments in training and education. These costs are excluded from the totals because budgets do not include the payroll of military and civilian personnel.

5. Each ERP system has its own infrastructure. Each obsolete legacy system must be custom-fitted into the changed ERP.

If DoD adopted a standard Platform-as-a-Service (PaaS) model for every ERP, it could offer standard application programming interfaces for all connections. Programs could then interact with the ERPs, and developers could code connectivity sub-systems using interchangeable interfaces. Adopting such an approach would materially reduce redundant work, cut costs and allow ERPs to be built incrementally.
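What "interchangeable interfaces" could mean in code is sketched below with Python's `typing.Protocol`. The `ErpService` interface and the two ERP classes are hypothetical, stand-in names; real ERPs would sit behind far richer APIs. A connectivity sub-system written once against the shared interface works with either ERP without custom fitting.

```python
from typing import Protocol


class ErpService(Protocol):
    """Hypothetical standard API that every ERP on the shared PaaS would expose."""

    def post_transaction(self, record: dict) -> str: ...
    def get_record(self, record_id: str) -> dict: ...


class PayrollErp:
    """Hypothetical ERP; stores records in a dict for illustration."""

    def __init__(self) -> None:
        self._records: dict[str, dict] = {}

    def post_transaction(self, record: dict) -> str:
        record_id = f"PAY-{len(self._records) + 1}"
        self._records[record_id] = dict(record)
        return record_id

    def get_record(self, record_id: str) -> dict:
        return self._records[record_id]


class LogisticsErp:
    """A different hypothetical ERP: different internals, same standard interface."""

    def __init__(self) -> None:
        self._rows: list[dict] = []

    def post_transaction(self, record: dict) -> str:
        self._rows.append(dict(record))
        return f"LOG-{len(self._rows)}"

    def get_record(self, record_id: str) -> dict:
        return self._rows[int(record_id.split("-")[1]) - 1]


def sync_subsystem(erp: ErpService, record: dict) -> dict:
    """A connectivity sub-system written once against the standard interface."""
    record_id = erp.post_transaction(record)
    return erp.get_record(record_id)


for erp in (PayrollErp(), LogisticsErp()):
    print(type(erp).__name__, sync_subsystem(erp, {"unit": "A-1", "amount": 250}))
```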

The current approach to implementing ERPs is not working. The project timeline is too long, and it can be shortened only by adopting a standard PaaS environment. To maintain interoperability during the transition from legacy to new ERP applications, the DoD CIO should impose standards that comply with open source formats. This would avoid spending money on tailor-made custom designs.

The new approach to ERP software design must rely on central direction on how all programs are executed. It also requires control of shared data definitions, because current inconsistencies in data formats impose a huge cost penalty. New efforts to start an ERP would have to be guided by strong direction on architecture, choice of cloud software and network design. Without such guidance, the current efforts to complete stand-alone ERPs will cost too much and deliver results too late.

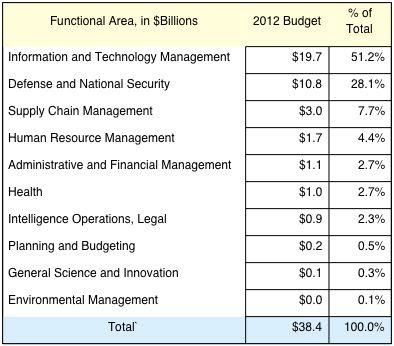

What is the Efficiency of DoD IT Spending?

Any aggregation of computers, software and networks can be viewed as a “cloud”. DoD is actually a cloud consisting of thousands of networks, tens of thousands of servers and millions of access points. DoD FY12 spending on information technologies is $38.4 billion. In addition, IT includes the costs of civilian and military payroll as well as most IT spending on intelligence. The total DoD cloud could therefore be over $50 billion, which is ten times larger than the budget of the ten largest commercial firms. The question is: how efficiently does DoD use its IT?

The efficiency of any system is defined as the ratio of Outputs to Inputs, also known as the productivity ratio of an enterprise. If only a small fraction of Inputs is converted to Outputs, IT can be labeled inefficient. The productivity ratio is always evaluated in dollars. Such numbers are readily available for DoD because the Office of Management and Budget (OMB) publishes analyses of IT costs every year.

Making Output/Input evaluations requires finding out how much of the total available IT budget is consumed by “management”, defined as “…costs incurred in the general upkeep or running of a plant, premises, or business, and not attributable to specific products or results.”

OMB lists the “information and technology management” function for IT. This includes all planning, administrative, management and acquisition costs as well as communications costs that cannot be attributed to any specific output.

This tabulation shows that only 48.8% of the costs of DoD IT functions are related to output. The remaining 51.2% is attributed to “management”, which includes expenditures for the CIO staffs of OSD and the components. The IT Output/Input ratio for DoD can therefore be estimated at less than one half.
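As a rough worked example, the sketch below assumes that the 48.8% output share from the tabulation can be applied to the $38.4 billion FY12 IT total cited above. That pairing is an assumption for illustration, since the tabulation may cover a somewhat different cost base. The 70% to 80% band is the commercial range reported below for major banks.

```python
def output_input_ratio(output_costs: float, total_costs: float) -> float:
    """Productivity ratio: share of IT spending attributable to outputs."""
    return output_costs / total_costs


total_it = 38.4        # $ billions, DoD FY12 IT spending cited in the text
output_share = 0.488   # share of costs tied to output, per the tabulation (assumed to apply to the total)
output = total_it * output_share
management = total_it - output
ratio = output_input_ratio(output, total_it)

commercial_low, commercial_high = 0.70, 0.80  # range the text reports for major banks
print(f"output ${output:.1f}B, management ${management:.1f}B, ratio {ratio:.1%}")
print("below commercial range:", ratio < commercial_low)
```

Under this assumption roughly $19.7 billion of the FY12 total would be management overhead, leaving a ratio well below the commercial band.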

Is the 48.8% ratio a good measure of IT performance? Can it be compared with the best commercial practices?

I have worked with productivity numbers for more than thirty years, have published over a hundred articles and books on this topic and own a registered trademark on Information Productivity. In terms of IT spending per capita, DoD is most comparable to the financial services sector because of its large volume of purchase transactions and huge assets. Compared with major banks, whose IT budgets for the top firms average $2 billion, where the IT productivity ratio has always been between 70% and 80%, DoD shows less than 50%.

The primary reason for the difference between commercial firms and DoD is the expenditure on IT infrastructure maintenance ($7.7 billion) and on IT information security ($2.8 billion). These two items account for more than half of the communications expense included in DoD management costs.

With an estimated 15,000 networks, the first priority for any future cost reductions should be the consolidation of communications. According to a 2006 GAO report, the Global Information Grid (GIG) was supposed to achieve major reductions in the number of networks. That has not happened.

DoD must restructure its IT communication operations to move away from an environment in which it is vulnerable to multiple cyber attacks. Cutting down the number of networks requires shifting computing to a much smaller number of enterprise clouds. That will reduce costs as well as increase security.

Enterprise E-Mail and Collaboration Application for DoD?

E-mail and collaboration (E&C) is the most attractive first step toward realizing DoD enterprise-wide systems. E&C features are generic: they are functionally identical for everyone. Creating a shared directory of addresses and implementing security are well understood. Implementing E&C as Software-as-a-Service (SaaS) offers huge cost reductions. For instance, Microsoft Office 365 offers cloud-based E&C for $288/seat/year. Google offers a wide range of E&C services plus applications for $50/seat/year.

The only comparable cost for a similar service is the Navy Marine Corps Intranet (NMCI), which is primarily targeted at handling E&C. The projected replacement cost is $2.9 billion/year, or $7,700 per seat. Though the services offered by the replacement are not strictly comparable with a SaaS solution, the gap between a cloud system and the Navy proposal is too large to be overlooked. The life-cycle cash flow difference is in the tens of billions.
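The size of the gap can be checked with back-of-the-envelope arithmetic from the figures above. The seat count is only what the $2.9 billion/year and $7,700/seat figures imply. The 10-year life cycle is an assumption, and the result is undiscounted cash. As noted, the services are not strictly comparable, so this is an order-of-magnitude check rather than a cost estimate.

```python
nmci_annual_cost = 2.9e9    # dollars per year, projected replacement cost cited in the text
nmci_cost_per_seat = 7_700  # dollars per seat per year, cited in the text
seats = nmci_annual_cost / nmci_cost_per_seat  # seat count implied by the two figures

saas_price_per_seat = {"Microsoft Office 365": 288, "Google": 50}  # $/seat/year, cited in the text
years = 10  # assumed life-cycle length (not from the text)

for vendor, price in saas_price_per_seat.items():
    saas_annual_cost = seats * price
    gap = (nmci_annual_cost - saas_annual_cost) * years  # undiscounted difference
    print(f"{vendor}: SaaS ${saas_annual_cost / 1e9:.2f}B/yr, "
          f"{years}-year gap ${gap / 1e9:.1f}B")
```

With roughly 377,000 implied seats, both SaaS prices leave a 10-year difference of about $28 billion, consistent with "tens of billions".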

If DoD could standardize on a secure E&C cloud, additional enterprise-wide efforts would follow. The question is whether DoD should implement E&C by upgrading the current Navy-operated environment or embark on a totally new direction using SaaS that runs on a secure Internet.

There is already a good example: the successful migration of 80,000 GSA employees to the Google cloud. GSA simply disconnected from legacy e-mail and replaced it with a completely new, low-risk service that delivers short-term net savings.

The Army has now started moving its E&C to DISA. When attempting the takeover, DISA found that the existing network was riddled with inconsistencies. Local operators were acting as if they owned all applications, adding features and attachments. For example, some locations had improperly configured firewalls, contractors had applied unpatched software versions, and different circuit cards could not be synchronized. Consequently, local systems would not be interoperable when consolidated, and local variations had to be modified before systems could be relocated into a unified environment. The Army conversion to enterprise E&C has now been halted to clean up existing systems. The current choice is to migrate local versions to centrally administered server farms.

Meanwhile, Congress has asked for an Army solution that would also fit Air Force, Navy and the Marines. That is an enormously demanding requirement, which calls for policy-level directions from the DoD CIO.

Standardizing e-mail for DoD includes a long list of add-ons, such as application code security at NIPR and SIPR levels, provisions for archiving all messages and assured backups of transactions. All of these will have to be standardized if enterprise system interoperability is to deliver major cost reductions. Unfortunately, a wide variety of such add-ons are already code-embedded in DoD servers and desktops. Existing systems are also integrated into Microsoft solutions, where they would not qualify as open source solutions.

The effort to unify DoD e-mail has also run into integration problems with mobile devices. Interfacing with Android, Microsoft and Apple smartphones limits acquisition choices. Therefore, new policy-level directives will have to be issued that dictate which devices are allowed to connect to the network.

With rising pressure to reduce IT spending, there is an interest in considering centrally procured off-the-shelf commercial SaaS applications instead of proceeding with extremely expensive incremental migrations from the legacy environment.

Replacing all existing E&C with a single SaaS would accelerate progress towards enterprise systems. Vendors would be able to compete for the lowest-cost services without the hurdle of cross-platform conversion. Local modifications to support specific needs could then be bolted on by the Army, Air Force, Navy and Marine Corps, as long as open source application interfaces were followed.

The critical issue in organizing enterprise e-mail and collaboration concerns the accountability for managing a shared computing environment for DoD. What is emerging is a shift of oversight from local CIOs to the operational accountability by the Cyber Command. Local CIOs could then concentrate on accelerating progress to catch up with commercial practices.

DISA is now proceeding with the implementation of the Army’s E&C. Progress is watched to see whether the 1992 goal of making DISA an enterprise services utility can be realized.

DoD Has a New Information Systems Strategy?

We now have the “DoD IT Enterprise Strategy and Roadmap” (ITESR_6SEP11). The DoD Deputy Secretary and the Chief Information Officer signed it. This makes the document the highest-level statement of IT objectives in over two decades. The new direction calls for an overhaul of the policies that guide DoD information systems. Implementation becomes a challenge in an era of declining funding for new systems development.

The following illustrates some of the issues that require a reorientation of how DoD manages information technologies:

1. Strategy: DoD personnel will have seamless access to all information, enabling the creation and sharing of information. Access will be through a variety of technologies, including special-purpose mobile devices.

Challenge: DoD personnel use computing services in 150 countries, at 6,000 locations and in over 600,000 buildings. This diversity calls for standardizing the formats of tens of thousands of programs, which requires a complete change in the way DoD systems are configured.

2. Strategy: Commanders will have access to information available from all DoD resources, enabling improved command and control, increasing speed of action and enhancing the ability to coordinate across organizational boundaries or with mission partners.

Challenge: Over 15,000 uncoordinated networks do not offer the availability and latency that are essential for real-time coordination of diverse sources of information. Integrating all networks under centrally controlled network management centers becomes the key requirement for further progress. This requires a complete reconfiguration of the GIG.

3. Strategy: Individual service members and government civilians will be offered a standard IT user experience, enabling them to do their jobs and providing them with the same look, feel, and access to information on reassignment, mobilization, or deployment. Minimal retraining will be necessary, since the output formats, vocabulary and menu options must be identical regardless of the technology used.

Challenge: DoD systems depend on over seven million devices for the input and display of information. Presently there are thousands of unique and incompatible formats for supporting user feedback to automated systems. These format incompatibilities require replacing the existing interfaces with a standard virtual desktop that recognizes differences in training and in literacy levels.

4. Strategy: Common identity management, authorization, and authentication schemes grant access to the networks based on a user’s credentials as well as on physical circumstances.

Challenge: This calls for the adoption of universal network authorizations for granting access privileges. It requires revising how access permissions are issued to over 70,000 servers. The workflow between the existing personnel systems and the access authorization authorities in human resources systems will require an overhaul of how access privileges are issued or revoked.

5. Strategy: Common DoD-wide services, applications and programming tools will be usable across the entire DoD, thereby minimizing duplicate efforts, reducing the fragmentation of programs and reducing the need for retraining when developers are reassigned or redeployed.

Challenge: This policy cannot be executed without revising the organizational and funding structures in place. Standardizing applications and software tools necessitates discarding much of the code that is already in place, or temporarily storing it as virtualized legacy code. Reducing data fragmentation requires a full implementation of the DoD data directory.

6. Strategy: Streamlined IT acquisition processes will deliver rapid fielding of capabilities, including enterprise-wide certification and accreditation of new services and applications.

Challenge: Presently there are over 10,000 operational systems in place, controlled by hundreds of acquisition personnel and involving thousands of contractors. There are 79 major projects (with current spending of $12.3 billion) that have been ongoing for close to a decade. These projects have proprietary technologies deeply ingrained through long-term contract commitments. Disentangling DoD from several billion dollars' worth of non-interoperable software requires Congressional approval.

7. Strategy: Consolidated operations centers provide pooled computing resources and bandwidth on demand. Standardized data centers must offer access and resources under service level agreements, at prices comparable with commercial practices. Standard applications should be easy to relocate across a range of competitive offerings without a cost penalty.

Challenge: The existing number of data centers, estimated at over 770, cannot be reduced without major changes in the software that currently occupies over 65,000 servers. Accomplishing this by shifting the workload to commercial firms, while keeping it under DoD control, would require tradeoffs between costs and security assurance.
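Item 4 above (access granted on a user's credentials as well as physical circumstances) reads much like attribute-based access control. The sketch below is one hypothetical way to express such a rule; the attribute names, clearance levels and locations are illustrative assumptions, not taken from the roadmap. The revocation set stands in for the feed from personnel systems that would revoke privileges.

```python
from dataclasses import dataclass

# Hypothetical clearance ordering, for illustration only.
CLEARANCE_RANK = {"unclassified": 0, "secret": 1, "top_secret": 2}


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    clearance: str        # credential attribute
    role: str             # credential attribute
    location: str         # physical circumstance
    managed_device: bool  # physical circumstance


@dataclass(frozen=True)
class Resource:
    name: str
    classification: str
    allowed_roles: frozenset[str]
    allowed_locations: frozenset[str]


def authorize(req: AccessRequest, res: Resource, revoked: set[str]) -> bool:
    """Grant access only if every credential and circumstance check passes."""
    if req.user_id in revoked:  # revocations assumed to come from the personnel/HR workflow
        return False
    if CLEARANCE_RANK[req.clearance] < CLEARANCE_RANK[res.classification]:
        return False
    if req.role not in res.allowed_roles:
        return False
    if req.location not in res.allowed_locations:
        return False
    return req.managed_device


supply_db = Resource("supply-db", "secret", frozenset({"logistics"}),
                     frozenset({"base-a", "base-b"}))
request = AccessRequest("u123", "secret", "logistics", "base-a", managed_device=True)
print(authorize(request, supply_db, revoked=set()))    # True
print(authorize(request, supply_db, revoked={"u123"}))  # False: privileges revoked
```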

In summary, the redesign of operational systems into a standard environment is unlikely to be implemented on a 2011-2016 schedule unless DoD considers radically new ways of achieving the stated objectives.

Over 50% of IT spending is in the infrastructure, not in functional applications. The OSD CIO has clear authority to start directing the reshaping of the infrastructure organizations. Consequently, the strategic objectives can be largely achieved, but only with major changes in the authority for executing the proposed plan. It remains to be seen whether the ambitious OSD strategies will meet the challenge of the new cyber operations.

Cutting IT Costs

The adoption of Platform-as-a-Service (PaaS) has now opened new IT cost reduction opportunities. This option must now enter into DoD planning.

In FY11 DoD spent 54% of its total IT budget of $36.3 billion on its infrastructure. The remainder was spent on functional applications. In comparison with commercial practices the size of the DoD infrastructure is excessive. DoD has never managed to share its infrastructures. Programs were built as stand-alone “silos”, each with its stand-alone infrastructure.

For instance, even the simple effort to consolidate what is supposed to be a commodity application, such as a common e-mail for the Army, has run into problems. The Army e-mail consolidation is difficult because of a lack of shared standards, numerous local network modifications, inconsistent software versions and incompatible desktops. The idea of placing parts of supply chain management, human resource systems, financial applications or administrative systems on shared platforms is too hard.

Sharing of the operational infrastructure is now feasible with PaaS platforms. PaaS calls for separating the software that defines the logic of an application from the method that describes how that application will be placed in a computing environment.

A PaaS cloud provisions data center assets, data storage capacity, communication connections, security restrictions, load balancing and all administrative requirements such as Service Level Agreements (SLAs). A PaaS cloud can be private or public. It can support local needs or serve global requirements. A system developer can then concentrate exclusively on authoring the application logic. When that is done, the code can be passed to the PaaS platform for the delivery of results.
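The separation PaaS relies on can be sketched in a few lines. The developer writes only the application logic (`order_total`). Placement is described separately in a deployment descriptor (`DeploymentSpec`), and the platform (`ToyPlatform`) provisions capacity and load-balances requests. All of these names are hypothetical, and the toy platform only mimics the idea; real PaaS offerings describe deployments in their own manifest formats.

```python
from dataclasses import dataclass, field
from typing import Callable


def order_total(order: dict) -> dict:
    """Application logic: the only part the developer writes."""
    total = sum(item["qty"] * item["unit_price"] for item in order["items"])
    return {"order_id": order["id"], "total": total}


@dataclass(frozen=True)
class DeploymentSpec:
    """Hypothetical placement descriptor, kept separate from the application code."""
    instances: int
    memory_mb: int
    region: str
    sla_uptime: float


@dataclass
class Deployment:
    handler: Callable[[dict], dict]
    spec: DeploymentSpec
    served_by: list[int] = field(default_factory=list)

    def __call__(self, request: dict) -> dict:
        instance = len(self.served_by) % self.spec.instances  # round-robin load balancing
        self.served_by.append(instance)
        return self.handler(request)


class ToyPlatform:
    """Stand-in for a PaaS: provisions shared capacity and runs whatever logic it is given."""

    def __init__(self, capacity_mb: int) -> None:
        self.free_mb = capacity_mb

    def deploy(self, handler: Callable[[dict], dict], spec: DeploymentSpec) -> Deployment:
        needed = spec.instances * spec.memory_mb
        if needed > self.free_mb:
            raise RuntimeError("not enough shared capacity for this deployment")
        self.free_mb -= needed
        return Deployment(handler, spec)


platform = ToyPlatform(capacity_mb=4096)
app = platform.deploy(order_total, DeploymentSpec(instances=2, memory_mb=512,
                                                  region="region-1", sla_uptime=0.999))
order = {"id": "A1", "items": [{"qty": 2, "unit_price": 5}, {"qty": 1, "unit_price": 3}]}
print(app(order))  # {'order_id': 'A1', 'total': 13}
```

Changing where or how the application runs means editing the descriptor, not the logic.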

PaaS produces results without the cost and complexity of managing operations, so the total budget for a new application can be reduced. Programmers can concentrate on the business logic, leaving it to PaaS to take care of the hard-to-manage infrastructure. With PaaS, all of the infrastructure components are already installed, and a single PaaS cloud can support hundreds or even thousands of applications on shared infrastructure. Consequently, the total cost of DoD operations will decrease.

PaaS is an attractive solution, except that each platform provider will try to lock applications into its own environment. Once application code is checked into a vendor’s PaaS, it will be difficult ever to check it out. Hundreds of vendors add proprietary refinements to their PaaS offerings, so moving an application to another PaaS will remain difficult.

What a customer wants is not vendor lock-in but the ability to port applications from one PaaS to another. You can then shop for different terms of service from multiple suppliers. Portability of application code across PaaS services makes price competition possible. Availability of multiple PaaS clouds also makes for more reliable uptime.
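Portability comes from writing the application against a vendor-neutral interface. The sketch below assumes a hypothetical `PaaSProvider` interface and two made-up vendors with invented prices; because the application depends only on the interface, choosing a supplier reduces to a price comparison.

```python
from abc import ABC, abstractmethod


class PaaSProvider(ABC):
    """Hypothetical vendor-neutral interface; the application depends only on this."""
    name = "abstract"

    @abstractmethod
    def monthly_price(self, instances: int) -> float:
        """Price quoted by the provider for running `instances` copies of the app."""

    def deploy(self, app_name: str, instances: int) -> str:
        return f"{app_name} on {self.name} ({instances} instances)"


class VendorA(PaaSProvider):
    name = "vendor-a"  # hypothetical provider: per-instance pricing only

    def monthly_price(self, instances: int) -> float:
        return 40.0 * instances


class VendorB(PaaSProvider):
    name = "vendor-b"  # hypothetical provider: flat fee plus a lower per-instance rate

    def monthly_price(self, instances: int) -> float:
        return 100.0 + 25.0 * instances


def cheapest(providers: list[PaaSProvider], instances: int) -> PaaSProvider:
    """Portable code makes every provider a valid target, so pick on price."""
    return min(providers, key=lambda p: p.monthly_price(instances))


for n in (4, 10):
    print(cheapest([VendorA(), VendorB()], n).deploy("payroll", n))
```

With these invented prices the per-instance vendor wins at 4 instances and the flat-fee vendor at 10; a locked-in application could not switch between them.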

To deal with the problem of interoperability across different PaaS vendors, VMware has just introduced Cloud Foundry, a PaaS platform. What is unique is that it is open source software. A number of firms have already signed up to support this approach. The only restriction is that applications must conform to compatible software frameworks, such as Spring for Java apps, Rails and Sinatra for Ruby apps, and Node.js for JavaScript apps.

An open source cloud platform prevents vendor monopoly, allows for competitive procurement, makes cross-cloud support available and offers multiple options for how services can be delivered. Such an arrangement assures customers of improved quality and maintainability.

In the next few years DoD will have to depend on the cloud technologies that are available from contractors. Cloud computing services are available from several hundred firms, including Google, Microsoft, Amazon, Rackspace, AT&T, Verizon and many others. According to the 2012 National Defense Authorization Act, DoD must migrate its data from government-administered cloud services and instead use private-sector offerings “that provide a better capability at a lower cost with the same or greater degree of security.”

A DoD customer contracts for a PaaS platform offering with features that are desired. In effect, the PaaS vendor delivers data center services while the customer retains full control over the application software.

The DoD policy of October 16, 2009 provides guidance regarding the use of Open Source Software (OSS). OSS is software for which the human-readable source code is available for use, study, reuse, modification, enhancement, and redistribution by the users. VMware’s PaaS meets the definition of commercial computer software and must be given statutory preference.

The broad peer-review enabled by publicly available open source code supports software reliability and security efforts through the identification and elimination of defects that might otherwise go unrecognized. The unrestricted ability to modify software source code then enables DoD to respond more rapidly to changing situations, missions, and future threats which otherwise would be constrained by vendor licensing.

The availability of Cloud Foundry opens a new approach to the migration to cloud computing. The more reliable PaaS may not take over, however, unless DoD changes its thinking about how to organize IT development and operations.

NOTE: Originally published as "Incoming" in AFCEA Signal Magazine, 2012

A third of all malware is encountered in the U.S.

Legitimate sites and advertisements on the Web are much more likely to deliver malware than "shady" sites, according to a new study released Wednesday.

According to the Cisco 2013 Annual Security Report, the highest concentration of online security threats does not come from "risky" sites such as pornography, pharmaceutical, or gambling sites, but from everyday sites.

"In fact, Cisco found that online shopping sites are 21 times as likely, and search engines are 27 times as likely, to deliver malicious content than a counterfeit software site," the study says. "Online advertisements are 182 times as likely to deliver malicious content than pornography."

The U.S. retains the top spot among countries where the most malware is encountered, accounting for a third of all malware, the study says. Russia was in the No. 2 spot with almost 10%; China dropped to less than 6%.

"Most Generation Y employees believe the age of privacy is over (91%) and one third say that they are not worried about all the data that is stored and captured about them," the study says. "They are willing to sacrifice personal information for socialization online. In fact, more Generation Y workers globally said they feel more comfortable sharing personal information with retail sites than with their own employers' IT departments."

SUMMARY

Young U.S. Internet users accept malware from conventional sources.

SOURCE: Dark Reading, January 2013

Computers That Understand What You Say

A smart-phone that engages in conversations is the next “Incoming” perturbation that will dictate how DoD will have to revise its information management practices.

DoD planners will have to include in their investment programs the availability of tactical consumer radios costing less than $300. The first firm to launch such technology is Apple with its iPhone 4S. It offers intelligent conversational capability. No other consumer computer firm has ever offered that before. We can be sure that other vendors will follow with similar products.

The iPhone 4S is the first device that offers a reasonable capacity to perform natural language processing using semantic methods. Apple has applied computational linguistics to make unstructured spoken and text exchanges between computers and humans possible.

The application that does this is called SIRI. Its capacity to talk back depends on semantic software that extracts the meaning of words from the Apple cloud. Though SIRI still has problems responding to unusual requests, a huge number of programmers are now enhancing the vocabulary of interactions while SIRI keeps “learning” from millions of conversations.

Over the past 20 years there have been many attempts to endow computers with a conversational capability, involving complex and very expensive special-purpose hardware and software. What makes SIRI different is that it packages conventional as well as innovative features into a combination that makes simple conversations possible. The shirt-pocket-sized iPhone includes not only fully featured e-mail, office applications, calendars and an unlimited number of business applications but also a camera, a video recorder, GPS, geotagging, a compass, a gyro, a proximity sensor and face identification features.

Into a 4.9 oz. device Apple packed UMTS/HSDPA/HSUPA (850, 900, 1900, 2100 MHz); GSM/EDGE (850, 900, 1800, 1900 MHz); CDMA EV-DO Rev. A (800, 1900 MHz); 802.11b/g/n Wi-Fi (802.11n 2.4GHz only) and Bluetooth 4.0 wireless. This makes the iPhone a software-defined radio covering a spectrum of frequencies. Its communications can be encrypted for security protection.

SIRI will talk in English (U.S. and UK), Chinese (Simplified), Chinese (Traditional), French, French (Canadian), French (Switzerland), German, Italian, Japanese (Romaji, Kana), Korean, Spanish, Arabic, Catalan, Cherokee, Croatian, Czech, Danish, Dutch, Estonian, Finnish, Flemish, Greek, Hawaiian, Hebrew, Hindi, Hungarian, Indonesian, Latvian, Lithuanian, Malay, Norwegian, Polish, Portuguese, Portuguese (Brazil), Romanian, Russian, Slovak, Swedish, Thai, Turkish, Ukrainian, Vietnamese.

DoD planners can view the iPhone 4S as a harbinger of a revolutionary new approach to how people will interact in the cyber sphere. Other manufacturers will enter a new technology race. The issue will be which of the many competing public clouds can support their respective devices with a superior capacity to conduct intelligent conversations without delays.

DoD information architecture will have to start adopting systems that will support person-centered applications. Though business applications may remain operating in the existing mode for a time, natural language applications should be focused on meeting the warfighter’s tactical needs. New systems should be able to offer the capacity to:

• Recognize the context of commands;

• Cope with inquiries that ask for summaries of complex data;

• Respond to silent texting, without keyboard inputs;

• Allow for terse communications about missions and objectives;

• Combine GPS, geography and intelligence information;

• Deliver situational awareness to individuals;

• Collect photo and video intelligence;

• Connect to diverse applications to obtain instant answers;

• Recognize diction characteristics of a sender;

• Use face recognition as means for biometric identification;

• Operate across multiple frequencies as a software-defined radio;

• Handle multiple languages for automatic translation of conversations;

• Track all communications and assign identity to an individual.

All of the linguistic intelligence of SIRI-like devices will remain, for several decades to come, on central clouds that house petabytes and even exabytes of semantic relationships. These relationships must be available in real time.

Semantic methods depend on examining millions of sentences to extract relationships between the syntax of questions and the most likely context in which a word or a sentence has appeared before. This requires extremely fast parallel computers that subdivide the task of finding the right answers.
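As a toy illustration of subdividing that work, the sketch below splits a corpus into shards, counts in parallel the words that co-occur with a target word (a map step), and merges the counts (a reduce step) to find the target's most likely context. It is a simple co-occurrence count, not SIRI's actual method; the corpus and stop-word list are made up, and Python threads are used only to show the structure, since CPU-bound work at real scale would run on separate processes or machines.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

STOP_WORDS = {"the", "a", "at", "of", "to"}  # hypothetical, deliberately tiny


def count_contexts(sentences: list[str], target: str) -> Counter:
    """Map step: count words that co-occur with `target` in one shard."""
    counts: Counter = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        if target in words:
            counts.update(w for w in words if w != target and w not in STOP_WORDS)
    return counts


def most_likely_context(corpus: list[str], target: str, shards: int = 4, top: int = 2):
    """Split the corpus, count shards in parallel, then merge (reduce) the counts."""
    size = max(1, -(-len(corpus) // shards))  # ceiling division
    chunks = [corpus[i:i + size] for i in range(0, len(corpus), size)]
    total: Counter = Counter()
    with ThreadPoolExecutor(max_workers=shards) as pool:
        for partial in pool.map(count_contexts, chunks, [target] * len(chunks)):
            total.update(partial)
    return total.most_common(top)


corpus = [
    "the bank raised the interest rate",
    "deposit money at the bank",
    "the river bank flooded",
    "the bank cut the interest rate again",
]
print(most_likely_context(corpus, "bank"))
```

Here "interest" and "rate" dominate, suggesting the financial sense of "bank"; a production system would weight, normalize and parse far more carefully.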

To maintain a 100% reliable connection between local cell-phone devices and the central repository of semantic intelligence, DoD will have to depend on the availability of a multiplicity of “on the edge” servers. This is especially necessary in the case of deployment of expeditionary forces.
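One way to express that multiplicity of servers is ordered failover: try the nearest edge server, then the next, and fall back to the central cloud. The sketch below assumes each server is reachable through a simple callable and that failure surfaces as a hypothetical `EdgeUnavailable` exception; real links would add timeouts, retries and health checks.

```python
from typing import Callable


class EdgeUnavailable(Exception):
    """Hypothetical error raised when a server cannot be reached."""


def query_with_failover(question: str,
                        servers: list[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try servers in order (nearest edge first, central cloud last).

    Returns (server_name, answer) from the first server that responds.
    """
    errors = []
    for name, ask in servers:
        try:
            return name, ask(question)
        except EdgeUnavailable as exc:
            errors.append(f"{name}: {exc}")  # remember why, then try the next one
    raise EdgeUnavailable("all servers failed: " + "; ".join(errors))


# Hypothetical stubs standing in for real network calls.
def jammed_edge(question: str) -> str:
    raise EdgeUnavailable("no link")


def forward_edge(question: str) -> str:
    return f"edge answer to '{question}'"


servers = [("edge-1", jammed_edge), ("edge-2", forward_edge),
           ("central-cloud", lambda q: f"cloud answer to '{q}'")]
print(query_with_failover("nearest supply point", servers))
```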

The availability of personal communicators that can hold conversations is a major breakthrough in the evolution of computing. The time has come for DoD planners to prepare for it. Intelligent communications will require different data centers and different networks.

NOTE: Originally published as "Incoming" in AFCEA Signal Magazine, 2012

Leaving Administrative Accounts Active on Routers

A network and security hardware vendor revealed that it had issued long-overdue patches for eight of its product families, limiting access to administrative accounts that could have allowed attackers to compromise the products.

The backdoor could have given an attacker complete access to the devices, provided the attacker knew the password or, possibly, had stolen an encryption key.

The vendor did limit access to the backdoor features to certain ranges of Internet addresses, but the groups of addresses included a number of servers for other companies and individuals as well. Compromising those servers could have given an attacker the ability to access vulnerable networking hardware.
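The weakness of allowlisting by address range is easy to see with Python's standard `ipaddress` module. The ranges and hosts below are hypothetical (taken from the documentation blocks reserved by RFC 5737); the point is that every host inside an allowed range passes the check, whether it belongs to the vendor or to someone else.

```python
import ipaddress

# Hypothetical allowlist for remote vendor support, using RFC 5737
# documentation ranges so that no real network is implied.
SUPPORT_ALLOWLIST = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def allowed(source_ip: str) -> bool:
    """True if the source address falls inside any allowlisted range."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in SUPPORT_ALLOWLIST)


# Hypothetical hosts: only the first actually belongs to the vendor.
hosts = {
    "vendor support server": "192.0.2.10",
    "unrelated company web server": "192.0.2.77",
    "home user on the same ISP block": "198.51.100.200",
    "outside host": "203.0.113.5",
}
for label, ip in hosts.items():
    print(f"{label:32} {ip:16} allowed={allowed(ip)}")

exposed = sum(net.num_addresses for net in SUPPORT_ALLOWLIST)
print(f"addresses that can reach the backdoor: {exposed}")  # two /24 blocks = 512
```

Compromising any of those 512 addresses, not just the vendor's own server, would satisfy the range check.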

In secure environments, it is highly undesirable to use appliances with backdoors built into them, even if only the manufacturer can access them.

Our research has confirmed that an attacker with specific internal knowledge of the router appliances may be able to remotely log into a non-privileged account on the appliance from a small set of IP addresses. These vulnerabilities are the result of the default firewall configuration and default user accounts on the unit.

The controversy comes as corporations and national governments worry over the security of the networking products manufactured across the globe. In October, the U.S. government recommended that companies not use products from Chinese manufacturers Huawei and ZTE, for fear that the Chinese government might insert a backdoor into the products. In August, researchers presenting at the annual Defcon hacking conference found enough vulnerabilities in Huawei's routers to allow attackers to compromise the devices remotely.

In 2007, a series of vulnerabilities in Cisco's networking operating system would have allowed a knowledgeable attacker backdoor access to any product running the operating system. Last year, researchers found that a common embedded chip had backdoor functionality as well. In fact, one security professional estimated that 20 percent of consumer routers have backdoors, as do half of all industrial control systems.

SUMMARY

It is a common error to leave a router’s administrative account set to the vendor’s original access code. That creates a backdoor, since such default codes are often available from online maintenance manuals.
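A basic audit compares each administrative account against published defaults. In this sketch the default list and the device accounts are hypothetical, and passwords are compared in plain text for clarity; real devices store hashed passwords, so an actual audit would hash each candidate default with the device's own scheme or simply attempt a login.

```python
# Hypothetical vendor defaults of the kind printed in public maintenance manuals.
KNOWN_DEFAULTS = {("admin", "admin"), ("admin", "password"), ("root", "default")}


def accounts_with_default_passwords(accounts: dict[str, str]) -> list[str]:
    """Return usernames whose password still matches a published vendor default.

    Plain-text comparison is for illustration only; real devices store hashes.
    """
    return sorted(user for user, password in accounts.items()
                  if (user, password) in KNOWN_DEFAULTS)


# Hypothetical router configuration after installation.
router_accounts = {"admin": "admin", "netops": "k3pt-Ch4nged!", "root": "default"}
print(accounts_with_default_passwords(router_accounts))  # ['admin', 'root']
```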

Distributed Denial of Service Extorts Ransom

In late 2011, trading services firm Henyep Capital Markets came under a distributed denial-of-service (DDoS) attack that disrupted many of the company's service portals. With the attack came a demand for ransom. The flood of packets that hit the company's trading services topped 35 Mbps, combining a variety of network traffic types and aiming both to overwhelm the network and to overtax the firm's application servers.

Rather than acquiesce to the criminals' demands, Henyep hired the firm Prolexic.

The initial DDoS attack caused performance issues on multiple Henyep trading websites for 24 hours. Company management did not respond to the DDoS attackers’ demand for a ransom in exchange for ending the attack. The company’s mitigation engineers restored access to all services on the sites within minutes after routing traffic through Prolexic’s global scrubbing centers where malicious traffic was removed.

Prolexic protects Internet-facing infrastructures against all known types of DDoS attacks at the network, transport and application layers through four DDoS traffic scrubbing centers.

Prolexic DDoS mitigation engineers in the U.S. quickly identified the initial attack as a SYN flood followed by multiple GET floods. The attack campaign peaked at 35.30 Mbps (megabits per second), 8.10 Kpps (thousand packets per second), and 122.00 Kconn (thousand connections per second) over two days. Prolexic mitigation engineers monitored the attacks and counteracted the perpetrator’s changing attack vectors throughout the campaign. As a result, the attackers were unable to take down the Henyep site or disrupt services despite the length of the attack.
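A SYN flood works by opening TCP handshakes that are never completed, so one simple detection signal is a large number of half-open connections. The sketch below models packets as hypothetical `(source, flag)` pairs and flags sources whose SYNs outnumber completing ACKs by a threshold; it ignores ports, sequence numbers and timeouts, and because flood traffic is often spoofed, production defenses also rely on techniques such as SYN cookies rather than per-source counts alone.

```python
from collections import defaultdict


def half_open_sources(packets, threshold: int) -> list[str]:
    """Flag sources whose SYNs exceed completing ACKs by at least `threshold` (>= 1).

    `packets` is a sequence of (source_ip, flag) pairs with flag "SYN" or "ACK";
    ports, sequence numbers and timeouts are deliberately ignored.
    """
    pending: defaultdict[str, int] = defaultdict(int)
    for src, flag in packets:
        if flag == "SYN":
            pending[src] += 1          # handshake started
        elif flag == "ACK" and pending[src] > 0:
            pending[src] -= 1          # handshake completed, no longer half-open
    return sorted(src for src, n in pending.items() if n >= threshold)


# Hypothetical traffic: a normal client completes two handshakes,
# a flooding source sends five SYNs and never answers.
traffic = [("198.51.100.7", "SYN"), ("198.51.100.7", "ACK"),
           ("198.51.100.7", "SYN"), ("198.51.100.7", "ACK")]
traffic += [("203.0.113.66", "SYN")] * 5
print(half_open_sources(traffic, threshold=3))  # ['203.0.113.66']
```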

Recently, DDoS attackers tried to take down Henyep’s trading operations again with a 30 Mbps ICMP flood and GET flood, without success, thanks to Prolexic DDoS protection. Throughout 2012, Henyep, like many other financial services companies, has continued to be the target of DDoS attackers, but Prolexic’s DDoS mitigation services have prevented any downtime.

With bandwidth capacity in excess of 800 Gbps, Prolexic’s in-the-cloud DDoS protection mitigates DoS and DDoS attacks that would overwhelm others. Its proven network of DDoS scrubbing centers is located in London, Hong Kong, San Jose, California, and Ashburn, Virginia.

Our DDoS scrubbing centers are supported by four Tier 1 global telecommunications carriers. This means Prolexic can mitigate the largest DDoS attacks by applying immense anti-DDoS bandwidth, provide DDoS protection services for multiple clients, and fight multiple DDoS attacks at once.

When a DDoS attack is detected, our DDoS protection services are implemented within minutes. Upon activation of DDoS protection, a Prolexic customer routes in-bound traffic to the nearest Prolexic scrubbing center, where proprietary DDoS filtering techniques, advanced routing, and patent-pending anti-DoS hardware devices remove DDoS traffic close to the source of the botnet activity. Clean traffic is then routed back to the customer’s network.
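Prolexic's filtering is proprietary, so the sketch below shows only a generic technique a scrubbing stage can use: a per-source token bucket that forwards traffic within a rate limit and drops the excess. The rate, burst size and addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    tokens: float
    last_seen: float


class SourceRateLimiter:
    """Per-source token bucket: `rate` packets/s sustained, bursts up to `burst`."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.buckets: dict[str, Bucket] = {}

    def allow(self, src: str, now: float) -> bool:
        bucket = self.buckets.setdefault(src, Bucket(tokens=self.burst, last_seen=now))
        # Refill in proportion to the time elapsed, capped at the burst size.
        bucket.tokens = min(self.burst, bucket.tokens + (now - bucket.last_seen) * self.rate)
        bucket.last_seen = now
        if bucket.tokens >= 1:
            bucket.tokens -= 1
            return True
        return False


def scrub(packets, limiter: SourceRateLimiter):
    """Forward only the (time, source) packets the limiter admits; drop the rest."""
    return [(t, src) for t, src in packets if limiter.allow(src, t)]


flood = [(0.0, "203.0.113.9")] * 10                      # hypothetical attacker burst
legit = [(0.0, "198.51.100.4"), (1.0, "198.51.100.4")]   # hypothetical client
clean = scrub(flood + legit, SourceRateLimiter(rate=2.0, burst=2.0))
print(len(clean), "of", len(flood + legit), "packets forwarded")  # 4 of 12
```

The flooding source is cut to its burst allowance while the legitimate client passes untouched, which is the "clean traffic is routed back" outcome in miniature.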

Because Prolexic dedicates more bandwidth to DDoS attack traffic, it can provide protection even against the largest and most complex DDoS attacks. Prolexic uses over 20 DDoS mitigation technologies, many of them proprietary.

Prolexic mitigates every type of DDoS attack at every layer, including Layers 3, 4 and 7. We even have a proven solution against encrypted attacks that vandalize HTTPS traffic in real time. Further, we use FIPS 140-2 Level 3 certified key management encryption tools with passive SSL decryption for extremely high performance.

Summary

DDoS attacks can be blunted and then eliminated through high-bandwidth networks. Often, DDoS attackers who see that their traffic has been re-routed through our DDoS mitigation network immediately abandon their attacks.
